Navigating Ethical and Safety Challenges in Autonomous Systems

As autonomous systems evolve, so do the ethical and safety challenges they bring. Learn how robotics and AI innovators are tackling these critical issues.

November 9, 2025

Overview

Autonomous systems, from self-driving cars to humanoid robots, are reshaping industries and changing how we interact with technology. That influence brings responsibility: ethical dilemmas and safety concerns are now central to discussions about these systems. How can we ensure that autonomous systems make decisions that align with human values while operating safely in complex environments?

This article dives into the ethical and safety challenges faced by autonomous systems and explores the strategies and frameworks being developed to address them.

Technology Breakdown

Autonomous systems rely on artificial intelligence (AI), machine learning (ML), and sensor technologies to operate independently. Their decision-making capabilities are governed by algorithms that process data and respond to environmental stimuli. While this enables them to perform tasks without human intervention, it also introduces unique challenges:

- Bias in Algorithms: AI systems learn from data, and if the training data is biased or unrepresentative, the system's decisions are likely to reflect that bias. For instance, facial recognition software has faced criticism after audits found higher error rates for women and for people with darker skin.

- Unpredictable Behavior: Autonomous systems can encounter edge cases—situations not accounted for during development—which may lead to unpredictable or unsafe outcomes.

- Data Privacy: Many autonomous systems, such as delivery drones or autonomous vehicles, collect large amounts of data about their environment, raising concerns about surveillance and data misuse.

- Accountability: In the event of a failure or accident, determining who is responsible—whether it’s the manufacturer, programmer, or user—can be highly complex.
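A common first check for the kind of bias described above is to compare a model's error rate across demographic groups. The sketch below assumes a binary classifier whose predictions are already available. The helper names `error_rate_by_group` and `max_error_gap`, the toy data, and the 0.05 tolerance are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def error_rate_by_group(y_true, y_pred, groups):
    """Return {group: fraction of misclassified examples} for each group label."""
    errors = defaultdict(int)
    counts = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        counts[group] += 1
        if truth != pred:
            errors[group] += 1
    return {g: errors[g] / counts[g] for g in counts}


def max_error_gap(rates):
    """Largest difference in error rate between the best- and worst-served groups."""
    return max(rates.values()) - min(rates.values())


# Toy labels, invented for illustration: 1 = "match", 0 = "no match".
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = error_rate_by_group(y_true, y_pred, groups)
gap = max_error_gap(rates)
TOLERANCE = 0.05  # hypothetical threshold; acceptable gaps are context-specific
print(rates, gap, "flag for review" if gap > TOLERANCE else "ok")
```

On this toy data, group A has no errors while group B is misclassified 60% of the time, so the gap is flagged. Equal error rates are only one of several fairness criteria, and these criteria can conflict with one another, so choosing the metric is itself a design decision.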

Case Study: Tesla Autopilot

Tesla's Autopilot system has faced scrutiny after crashes involving its vehicles. Autopilot is a driver-assistance system (SAE Level 2) that requires the driver to supervise it at all times, yet investigations into several of these crashes found that drivers had over-relied on it, treating it as a fully autonomous driver. This highlights the critical need for clear communication about system limitations and the importance of continuous safety testing.

Industry Impact

The ethical and safety challenges of autonomous systems have far-reaching implications for industries and society. Here’s how they are being addressed:

1. Regulatory Frameworks

Governments and international organizations are working to establish standards and regulations for the development and deployment of autonomous systems. For example:

- The European Union’s Ethics Guidelines for Trustworthy AI outline principles like transparency, accountability, and human oversight.

- The U.S. Department of Transportation has issued guidelines for the testing and deployment of autonomous vehicles.

2. Ethical AI Development

Companies are investing in ethical AI initiatives to mitigate bias and ensure fairness. For example:

- Google’s AI Principles emphasize avoiding bias and ensuring accountability.

- The nonprofit Partnership on AI brings together tech companies, researchers, and policymakers to address ethical concerns in AI development.

3. Safety Innovations

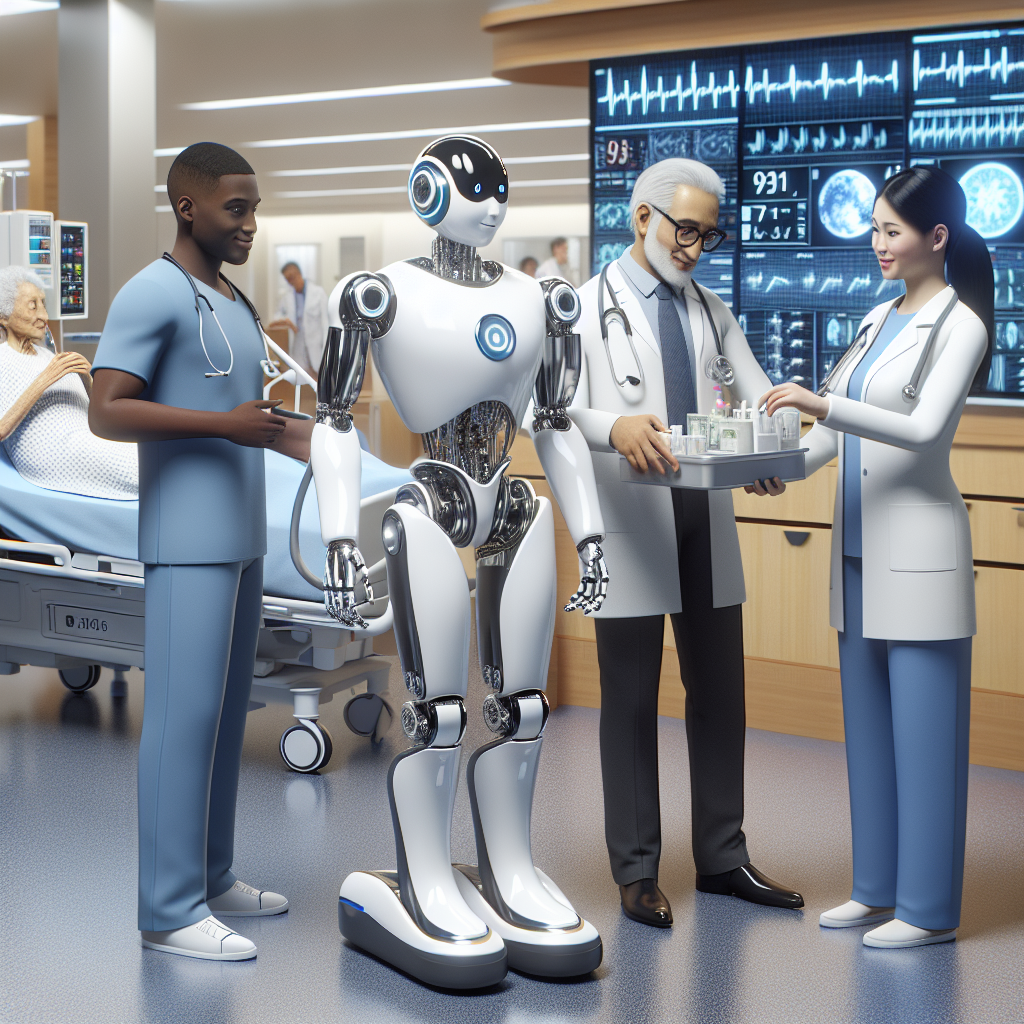

Safety is a top priority for autonomous systems, particularly in high-stakes applications like healthcare and transportation. Innovations include:

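- Redundant Systems: Many autonomous vehicles combine several sensor types (e.g., LIDAR, radar, and cameras) with overlapping fields of view. If one sensor fails or is degraded, for example a camera blinded by glare, the others can still cover the same area.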

- Simulated Environments: Companies like Waymo use virtual simulations to test their systems in millions of scenarios before deploying them in the real world.

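- Fail-Safe Mechanisms: Many robots and autonomous machines are designed to revert to a safe state when something fails, such as stopping movement when an anomaly is detected. For a vehicle at speed, the safe state may instead be a controlled maneuver, such as slowing down and pulling over.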
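The redundancy and fail-safe ideas above can be combined in a small sketch. It assumes three sensors (say lidar, radar, and camera) that each report the distance to the nearest obstacle in metres, with `None` meaning the sensor has failed. Readings are fused by majority voting, and the system latches into a stop state whenever the readings cannot be trusted or the obstacle is too close. The names `fuse_distance` and `SafetyMonitor`, and the 1.0 m spread and 5.0 m clearance thresholds, are hypothetical. Production systems use far richer fusion (e.g., Kalman filtering over tracked objects) and dedicated safety controllers.

```python
from enum import Enum
from statistics import median
from typing import Optional, Sequence


class Mode(Enum):
    NORMAL = "normal"
    SAFE_STOP = "safe_stop"


def fuse_distance(readings: Sequence[Optional[float]], max_spread: float = 1.0) -> Optional[float]:
    """Fuse redundant distance readings (metres); None marks a failed sensor.

    Readings within max_spread of the median count as agreeing, and at least
    two must agree. Returns the median of the agreeing readings, or None when
    the readings cannot be trusted.
    """
    valid = [r for r in readings if r is not None]
    if len(valid) < 2:
        return None  # too few working sensors to cross-check
    centre = median(valid)
    agreeing = [r for r in valid if abs(r - centre) <= max_spread]
    if len(agreeing) < 2:
        return None  # sensors disagree; no majority to trust
    return median(agreeing)


class SafetyMonitor:
    """Fail-safe supervisor that latches into SAFE_STOP until explicitly reset."""

    def __init__(self, min_clearance: float = 5.0):
        self.min_clearance = min_clearance  # metres; hypothetical threshold
        self.mode = Mode.NORMAL

    def step(self, readings: Sequence[Optional[float]]) -> Mode:
        distance = fuse_distance(readings)
        if distance is None or distance < self.min_clearance:
            # Untrustworthy data or an obstacle too close: stop. step() never
            # switches back to NORMAL on its own; only reset() does.
            self.mode = Mode.SAFE_STOP
        return self.mode

    def reset(self) -> None:
        """Clear the fault, e.g. after an operator has inspected the system."""
        self.mode = Mode.NORMAL


monitor = SafetyMonitor()
print(monitor.step([12.0, 12.3, 40.0]))  # faulty third sensor is outvoted -> Mode.NORMAL
print(monitor.step([12.0, None, None]))  # only one working sensor -> Mode.SAFE_STOP
print(monitor.step([12.0, 12.1, 12.2]))  # still latched -> Mode.SAFE_STOP
```

Latching is a deliberate choice here. A monitor that resumed as soon as one clean reading arrived could oscillate between moving and stopping on an intermittent fault, so the sketch requires an explicit `reset()`.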

Conclusion

As autonomous systems continue to evolve, so too must our approaches to managing their ethical and safety challenges. By prioritizing transparency, accountability, and rigorous safety testing, we can harness the immense potential of these technologies while minimizing risks. Whether you're a developer, policymaker, or enthusiast, understanding these challenges is essential to shaping a future where humans and machines coexist harmoniously.

The journey toward ethical and safe autonomous systems is a collaborative effort. It requires input from technologists, ethicists, regulators, and the public. Together, we can navigate these challenges and unlock the transformative potential of autonomous technology.
